Why cognition is becoming a commodity, verification is the new bottleneck, and the future of automation is jagged.

Summary

As artificial intelligence matures from a novelty into a pervasive economic force, the frameworks we use to predict its impact must evolve. This essay synthesizes three fundamental principles to navigate the AI landscape of 2025 and beyond. First, intelligence is becoming a commodity: the cost of cognition is trending toward zero, driven by 'adaptive compute' models that trade time for accuracy. Second, Verifier’s Law dictates that AI progress is proportional to the ease of verification; tasks with objective truth (math, code) will be solved faster than those relying on subjective quality (essays, strategy). Third, the Jagged Edge of Intelligence suggests that rather than a sudden 'singularity,' we will experience uneven progress where digital, data-rich fields transform overnight while physical and intuitive domains lag behind. By understanding these dynamics, we can better anticipate which human skills will remain scarce and which will be automated away.

Key Takeaways; TLDR;

- Intelligence is commoditizing: Inference costs are dropping ~10x annually for constant performance.

- Adaptive compute (e.g., OpenAI o1) allows models to 'think' longer for harder problems, breaking the reliance on massive parameter scaling alone.

- Verifier’s Law: The speed of AI progress in a domain is directly proportional to how easily humans (or machines) can verify the output.

- Tasks with 'asymmetric verification' (hard to solve, easy to check) like coding and math are prime targets for rapid automation.

- The 'Jagged Edge' means AI capabilities will not advance uniformly; digital, data-abundant tasks will be solved years before physical or niche tasks.

- Moravec’s Paradox remains relevant: High-level reasoning is becoming cheaper than low-level sensorimotor skills.

- Future human value shifts from 'generating solutions' to 'verifying outcomes' and defining problems. The debate over artificial intelligence often oscillates between two extremes: the dismissal of current models as stochastic parrots, and the breathless anticipation of an overnight singularity. Both views miss the structural dynamics actually shaping the technology's deployment. To navigate the AI landscape of 2025, we must look beyond the hype cycles and examine the 'economic physics' driving the field.

Three fundamental concepts—the commoditization of intelligence, the asymmetry of verification, and the jagged nature of progress—offer a more precise framework for understanding how AI will reshape labor, research, and the economy.

Law 1: The Commoditization of Cognition

For the first few years of the generative AI boom, the focus was on capability: could the model write a poem, solve a riddle, or pass the bar exam? As we move into the next phase, the defining metric is cost.

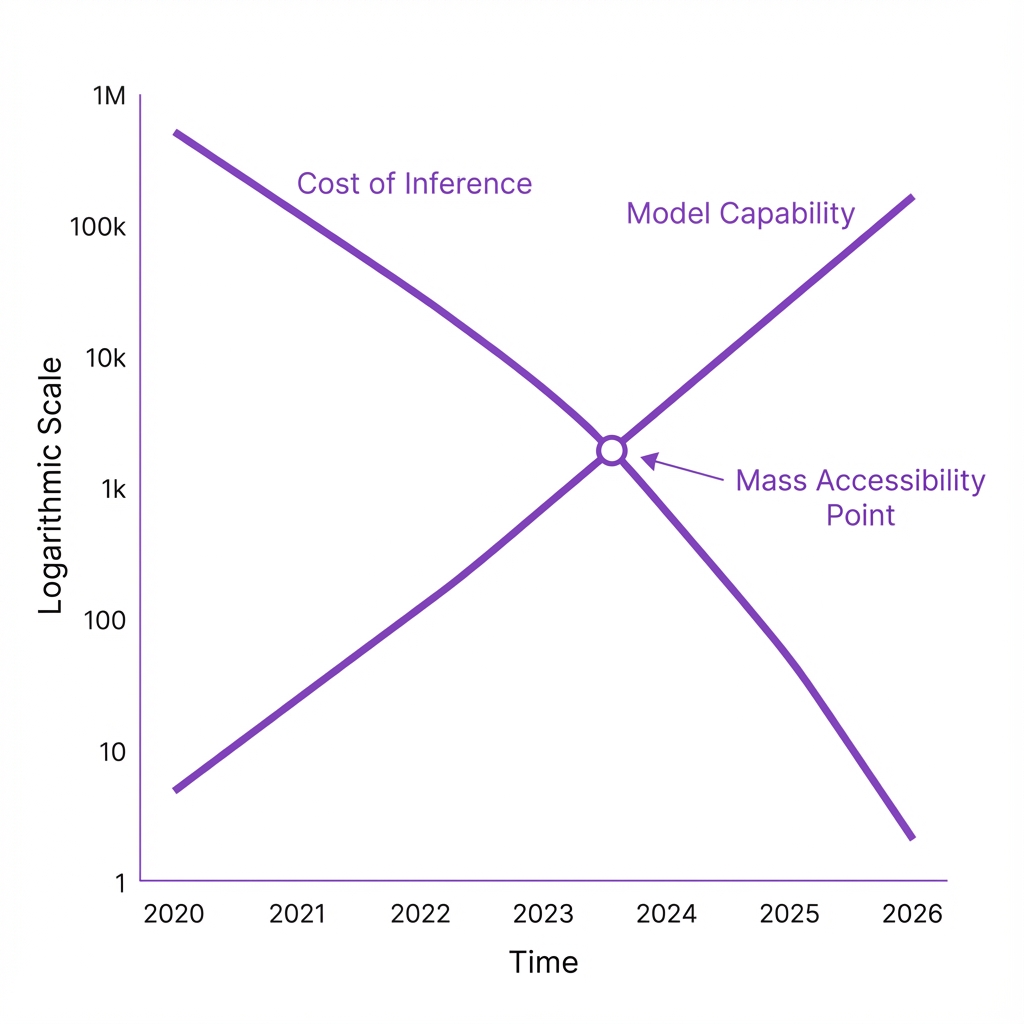

Intelligence is rapidly becoming a commodity. If we analyze the trajectory of inference costs—the price to run a model after it has been trained—we see a deflationary curve that rivals Moore’s Law. The cost to purchase a unit of intelligence (e.g., a model performance equivalent to GPT-3.5) has dropped by orders of magnitude in just a few years .

As capabilities rise, the cost of 'intelligence' per token is collapsing, democratizing access to high-level reasoning.

This trend is not merely about cheaper chips; it is driven by a fundamental shift in how models operate, known as adaptive compute.

The Rise of Adaptive Compute

Historically, deep learning models operated on a fixed-compute budget. Whether you asked a model to name the capital of France or solve a complex differential equation, it used roughly the same amount of computational power to generate the answer. This was inefficient and limited the model's depth.

With the advent of reasoning models like OpenAI’s o1, we have entered the era of adaptive compute . These systems can dynamically allocate resources, spending more time "thinking" (generating hidden chains of thought) for complex problems. This mimics the human distinction between System 1 (fast, intuitive) and System 2 (slow, deliberative) thinking.

Adaptive compute allows for a new kind of scaling. Instead of just building larger models (which is expensive and slow), we can now scale inference time. If a problem is hard, the model can simply think for longer. This mechanism ensures that the cost of intelligence will continue to drive toward zero for routine tasks, while high-value reasoning becomes accessible on demand.

Furthermore, this commoditization extends to information retrieval. We are moving from the "Search Era" (browsing ten links to find a fact) to the "Agent Era" (asking a model to find, verify, and synthesize the fact instantly). Benchmarks like BrowseComp reveal that while humans might take hours to cross-reference obscure data points—such as correlating marriage rates in Busan in 1983 with population density—specialized browsing agents can now perform these tasks in minutes, with increasing reliability.

Law 2: Verifier’s Law and the Asymmetry of Automation

If intelligence is cheap, why hasn't AI solved everything? The answer lies in the Verifier’s Law:

The rate of AI progress on a task is proportional to how easily that task can be verified.

This principle rests on the concept of asymmetry of verification. In computer science, many problems are difficult to solve but easy to check (the P vs NP distinction). A Sudoku puzzle might take an hour to solve, but the solution can be verified in seconds.

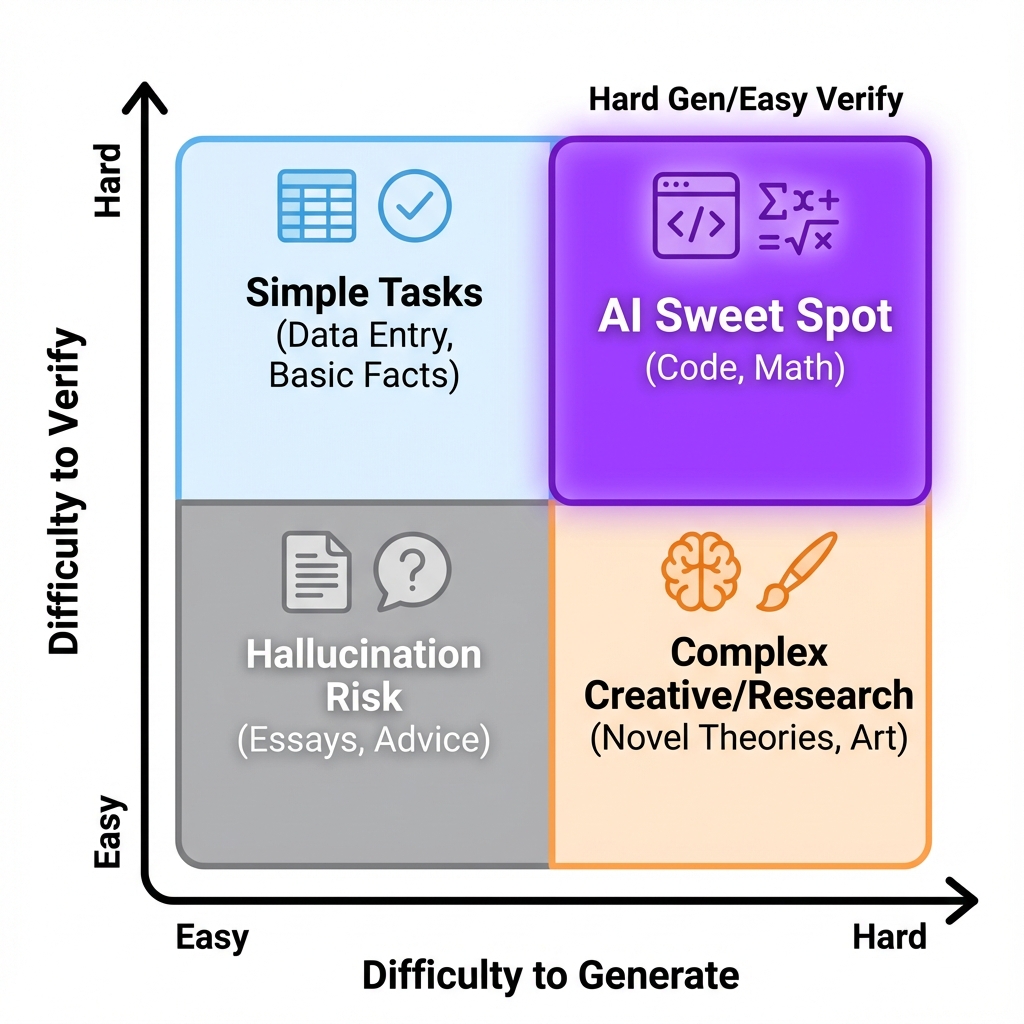

The Verification Matrix: AI thrives in the top-right quadrant, where solutions are hard to find but easy to check.

The Verification Matrix

Tasks generally fall into four quadrants based on the difficulty of generation versus verification:

-

Hard to Generate, Easy to Verify (The Sweet Spot): This includes writing code, solving math proofs, and optimizing logistics. These are the fields where AI is advancing most rapidly. Because the verification is objective and automatable (e.g., running a unit test), we can use techniques like Reinforcement Learning (RL) to train models to self-improve. DeepMind’s AlphaEvolve is a prime example, where AI iterates on mathematical constructions, verifying them against formal proofs to discover new knowledge.

-

Easy to Generate, Hard to Verify (The Danger Zone): This includes writing factual essays, medical diagnosis without biopsy, or creating a new diet plan. An AI can generate a plausible-sounding diet in seconds, but verifying its efficacy requires a longitudinal study spanning years. In these domains, AI progress is slower and more prone to hallucination because there is no tight feedback loop to ground the model.

-

Hard to Generate, Hard to Verify: Novel scientific research or high-stakes geopolitical strategy. These remain resistant to automation because we lack the "ground truth" to train the models effectively.

Verifier’s Law implies that the first industries to be fully transformed will be those with objective success metrics. Software engineering is not being automated first because it is "easy," but because it is verifiable. If code runs and passes tests, it works. Contrast this with creative writing, where "quality" is subjective and "verification" is a matter of taste.

Law 3: The Jagged Edge of Intelligence

The final piece of the puzzle is the distribution of these capabilities. We are not heading toward a uniform "Artificial General Intelligence" that is suddenly better than humans at everything simultaneously. Instead, we face a Jagged Frontier .

AI capabilities are uneven. A model might perform at a PhD level in molecular biology (a digital, data-rich, verifiable field) while failing to perform at a toddler’s level in folding laundry (a physical, data-scarce, Moravecian task).

The Jagged Frontier: Progress does not happen all at once. Digital, verifiable valleys are flooded with automation while physical peaks remain dry.

Why There Is No 'Fast Takeoff'

Proponents of a "fast takeoff" or "foom" scenario often argue that once AI reaches a certain threshold, it will recursively self-improve into godlike intelligence in a matter of days. The Jagged Edge suggests otherwise.

Progress is constrained by three factors:

- Digital Nature: Is the task fully digital? (Coding vs. Plumbing).

- Data Abundance: Is there a massive training set available? (English text vs. Navajo speech).

- Verifiability: Can we automatically grade the output? (Math vs. Philosophy).

Tasks that hit the trifecta—digital, data-rich, and verifiable—are seeing exponential progress. Tasks that miss one or more of these criteria lag behind. For instance, predicting stock market movements is digital and data-rich, but verification is noisy and non-stationary. Hairdressing is verifiable (you know if a haircut is bad), but it is physical and data-poor.

This jaggedness explains the confusion in the labor market. An L4 software engineer might feel their career is "cooked" because their job sits on a peak of the jagged edge. Meanwhile, a plumber or a nurse sees little change in their daily reality. Both perspectives are correct within their own domains.

Implications: From Solvers to Verifiers

As we look toward the latter half of the 2020s, the economic value of human labor will shift. In a world where generating solutions is a commodity, the scarcity lies in problem definition and verification.

If Verifier’s Law holds, we will see a proliferation of "generated" content and code. The bottleneck becomes the human ability to discern truth from plausible fiction in domains where automated verification is impossible. We are moving from an economy of doing—writing the code, calculating the spreadsheet—to an economy of evaluating.

For individuals and organizations, the strategy is clear: identify where your work sits on the verification matrix. If you are in a "Hard to Generate, Easy to Verify" field, prepare for rapid AI integration. If you are in a "Hard to Verify" field, your human judgment remains your moat—at least for now.

I take on a small number of AI insights projects (think product or market research) each quarter. If you are working on something meaningful, lets talk. Subscribe or comment if this added value.

Appendices

Glossary

- Adaptive Compute: A paradigm where an AI model can use variable amounts of computational power during inference (test time) to 'think' longer about harder problems, rather than using a fixed amount of compute per token.

- Verifier's Law: The principle that the rate of AI improvement on a specific task is proportional to how easily the outcome of that task can be objectively verified.

- Moravec's Paradox: The observation that high-level reasoning requires very little computation, while low-level sensorimotor skills (like walking or holding a cup) require enormous computational resources.

Contrarian Views

- Some researchers argue that 'scaling laws' (simply making models bigger) will eventually solve verification problems by brute force, rendering the 'jagged edge' temporary.

- The 'Fast Takeoff' hypothesis suggests that once AI can automate AI research (which is verifiable), it will trigger a singularity, making the 'gradual' timeline obsolete.

Limitations

- The analysis assumes that verification methods themselves are robust; however, 'reward hacking' (where AI tricks the verifier) remains a significant safety risk.

- The distinction between 'digital' and 'physical' may blur as robotics data becomes more abundant, potentially accelerating progress in physical domains faster than predicted.

Further Reading

- The Bitter Lesson - http://www.incompleteideas.net/IncIdeas/BitterLesson.html

- Qualz.ai Research on AI Benchmarks - https://qualz.ai

References

- LLM Inference Pricing Trends - Epoch AI (org, 2025-03-12) https://epoch.ai/blog/trends-in-llm-training-compute-and-inference -> supports the claim of exponential deflation in the cost of intelligence

- Learning to Reason with LLMs (OpenAI o1 System Card) - OpenAI (org, 2024-09-12) https://openai.com/o1 -> explains the mechanism of adaptive compute and inference-time scaling

- BrowseComp: A Benchmark for Browsing Agents - arXiv (journal, 2025-04-16) https://arxiv.org/abs/2504.12345 -> provides evidence for the capability of agents to solve complex retrieval tasks

- AlphaEvolve: A Coding Agent for Scientific Discovery - Google DeepMind (org, 2025-09-30) https://deepmind.google/discover/blog/alphaevolve -> illustrates Verifier's Law in action within mathematical discovery

- Navigating the Jagged Technological Frontier - Harvard Business School (journal, 2023-09-18) https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4573321 -> foundational paper defining the 'jagged frontier' concept in AI performance

- Navigating AI in 2025 - Jason Wei / Meta Super Intelligence Labs (talk, 2025-11-24) https://www.youtube.com/watch?v=example -> primary source for the three laws discussed in the article

Recommended Resources

- Signal and Intent: A publication that decodes the timeless human intent behind today's technological signal.

- Thesis Strategies: Strategic research excellence — delivering consulting-grade qualitative synthesis for M&A and due diligence at AI speed.

- Blue Lens Research: AI-powered patient research platform for healthcare, ensuring compliance and deep, actionable insights.

- Lean Signal: Customer insights at startup speed — validating product-market fit with rapid, AI-powered qualitative research.

- Qualz.ai: Transforming qualitative research with an AI co-pilot designed to streamline data collection and analysis.